Research Projects

- Localization and Mapping for Autonomous Driving

- NVIDIA's lidar-free autonomous driving is powered by my visual localization work. Check out the demo videos!

- Related publications:

- "Monocular Localization in Urban Environments using Road Markings", IEEE Intelligent Vehicles Symposium (IV), 2017

- RGB-D SLAM Using Line Features

- Large lighting variations challenge all visual odometry methods, even those using RGB-D cameras. Line segments are abundant indoors and less sensitive to lighting changes than point features. We propose a line segment-based RGB-D indoor odometry algorithm that is robust to lighting variation. We also investigate fusing point and line features for RGB-D SLAM/odometry. Project Website

- Related publications:

- "Robustness to Lighting Variations: An RGB-D Indoor Visual Odometry Using Line Segments", IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2015

- "Robust RGB-D Odometry Using Point and Line Features", IEEE International Conference on Computer Vision (ICCV), 2015

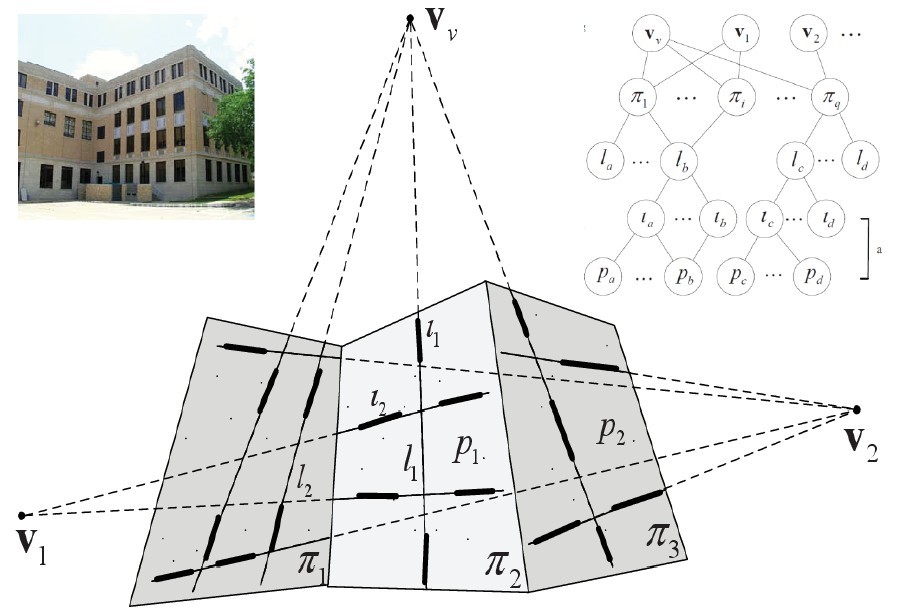

- Multilayer Feature Graph for Robot Navigation

- We design a multilayer feature graph (MFG) to facilitate scene understanding and robot navigation in urban areas. The nodes of an MFG are features such as SIFT points, line segments, lines, and planes, while the edges represent geometric relationships such as adjacency, parallelism, collinearity, and coplanarity. Project Website

- Related publications:

- "A Two-View based Multilayer Feature Graph for Robot Navigation", IEEE International Conference on Robotics and Automation (ICRA), 2012

- "Automatic Building Exterior Mapping Using Multilayer Feature Graphs", IEEE International Conference on Automation Science and Engineering (CASE), 2013

- "High Level Landmark-Based Visual Navigation Using Unsupervised Geometric Constraints in Local Bundle Adjustment", IEEE International Conference on Robotics and Automation (ICRA), 2014

- "Visual Navigation Using Heterogeneous Landmarks and Unsupervised Geometric Constraints", IEEE Transactions on Robotics (T-RO), 2015

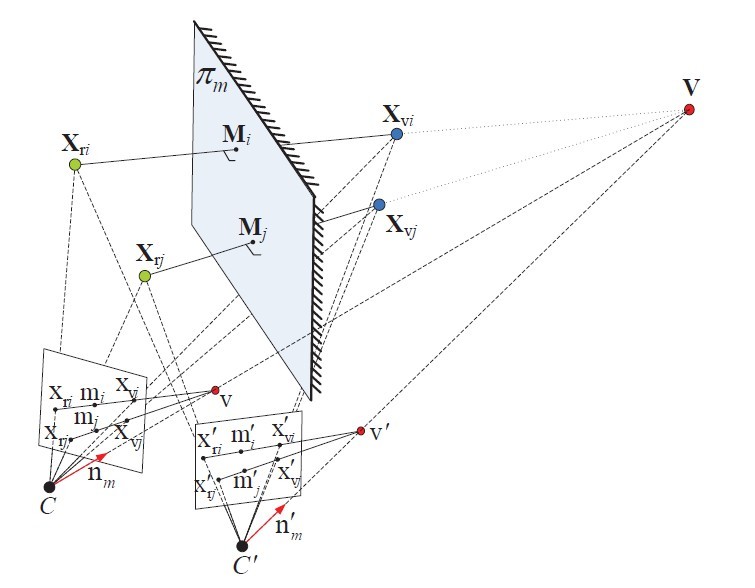
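The node and edge description above can be pictured as a small typed graph. The sketch below is only an illustration, not the published MFG implementation: the class names `NodeKind`, `Relation`, and `FeatureGraph`, along with the string node ids, are hypothetical, and all geometry (how features are detected and how relationships are verified) is left out.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from enum import Enum


class NodeKind(Enum):
    """Feature types named in the project description."""
    POINT = "point"            # e.g. a SIFT keypoint
    LINE_SEGMENT = "segment"
    LINE = "line"
    PLANE = "plane"


class Relation(Enum):
    """Geometric relationships named in the project description."""
    ADJACENCY = "adjacency"
    PARALLELISM = "parallelism"
    COLLINEARITY = "collinearity"
    COPLANARITY = "coplanarity"


@dataclass
class FeatureGraph:
    """Hypothetical container: typed nodes plus undirected, labeled edges."""
    nodes: dict = field(default_factory=dict)  # node id -> NodeKind
    edges: dict = field(default_factory=lambda: defaultdict(set))  # node id -> {(neighbor id, Relation)}

    def add_node(self, node_id, kind):
        self.nodes[node_id] = kind

    def relate(self, a, b, relation):
        # Edges are symmetric, so the relation is stored in both directions.
        if a not in self.nodes or b not in self.nodes:
            raise KeyError("both endpoints must be added before relating them")
        self.edges[a].add((b, relation))
        self.edges[b].add((a, relation))

    def neighbors(self, node_id, relation):
        # Sorted ids of nodes linked to node_id by the given relation.
        return sorted(n for n, r in self.edges[node_id] if r is relation)


graph = FeatureGraph()
graph.add_node("s1", NodeKind.LINE_SEGMENT)
graph.add_node("s2", NodeKind.LINE_SEGMENT)
graph.add_node("p1", NodeKind.PLANE)
graph.relate("s1", "s2", Relation.COLLINEARITY)
graph.relate("s1", "p1", Relation.COPLANARITY)
print(graph.neighbors("s1", Relation.COLLINEARITY))  # ['s2']
```

Labeling each edge with its relation lets one structure answer questions such as "which segments are collinear with this one" or "which features lie on this plane" without keeping a separate graph per relationship.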

- Robust Recognition of Planar Mirrored Walls

- Mobile robots need to recognize objects in their vicinity for navigation and safety. However, highly reflective surfaces, such as glass building exteriors and mirrored walls, challenge almost every type of sensor, including laser range finders, sonar arrays, and cameras, because light and sound signals simply bounce off them. As a result, such surfaces are often invisible to the sensors, yet detecting them is necessary to avoid collisions. In this project, we develop algorithms for detecting planar mirrored walls based on two views from an on-board camera.

- Related publications:

- "Automatic Recognition of Spurious Surface in Building Exterior Survey", IEEE International Conference on Automation Science and Engineering (CASE), 2013

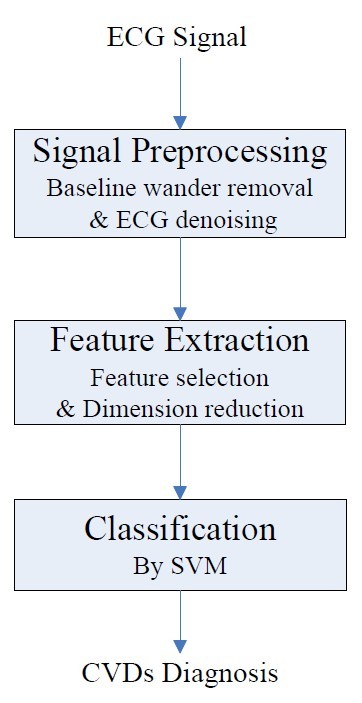

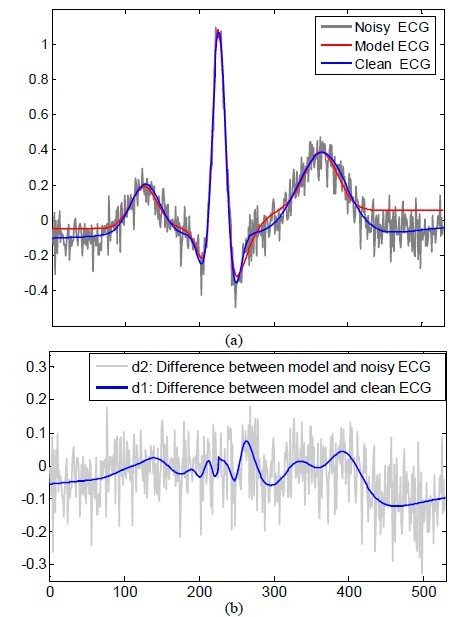

- ECG Signal Processing and Pattern Analysis

- Related publications:

- "Model-based ECG Denoising Using Empirical Mode Decomposition", IEEE International Conference on Bioinformatics and Biomedicine (BIBM), 2009

- "Model-based Feature Extraction of Electrocardiogram Using Mean Shift", International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), 2009

- "Intelligent Diagnosis of Cardiovascular Diseases Utilizing ECG Signals", International Journal of Information Acquisition, 2010

- Vision-based Robotic Pen-and-Ink Drawing

- Related publications:

- "Preliminary Study on Vision-based Pen-and-Ink Drawing by a Robotic Manipulator", IEEE/ASME International Conference on Advanced Intelligent Mechatronics (AIM), 2009. [ppt]

Course Projects

- CSCE666 Pattern Analysis, Fall 2011

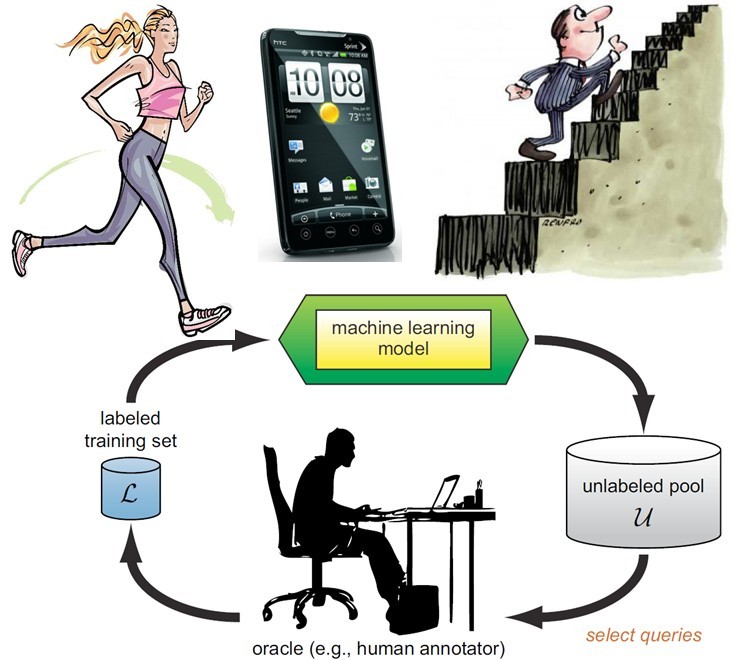

- Active Learning on Smartphone based Human Activity Recognition

- In this project, we design a robust human activity recognition system based on a smartphone. The system uses the smartphone's built-in accelerometer as its only sensor to collect signals for classification. Active learning algorithms are used to reduce the labor and time cost of labeling large amounts of data.

- Project Report:

- Amin Rasekh, Chien-An Chen, and Yan Lu. "Human Activity Recognition using Smartphone", 2011. [ppt]
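The project description does not say which active learning strategy was used. As one common possibility, the sketch below shows pool-based uncertainty sampling with the least-confidence criterion: given class probabilities predicted for unlabeled accelerometer windows, it picks the windows the classifier is least sure about, so that only those are sent for manual labeling. The function name `least_confident` and the probability values are hypothetical.

```python
def least_confident(probabilities, k):
    """Return indices of the k pool samples whose top class probability is lowest."""
    confidence = [max(p) for p in probabilities]
    ranked = sorted(range(len(probabilities)), key=lambda i: confidence[i])
    return ranked[:k]


# Made-up class probabilities for four unlabeled windows over three activities.
pool = [
    [0.95, 0.03, 0.02],  # confident prediction, not worth labeling
    [0.40, 0.35, 0.25],
    [0.55, 0.40, 0.05],
    [0.34, 0.33, 0.33],  # nearly uniform, most informative to label
]
print(least_confident(pool, 2))  # [3, 1]
```

In a full loop, the selected windows would be labeled, added to the training set, and the classifier retrained before the next round of selection.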